Agent memory must be treated as a first-class, governed database

AI runs on data. If you’re a leader pushing for efficiency and innovation, there’s one fundamental thing to understand: memory isn’t just a feature of your AI systems; it’s the foundation of how they learn, adapt, and take action.

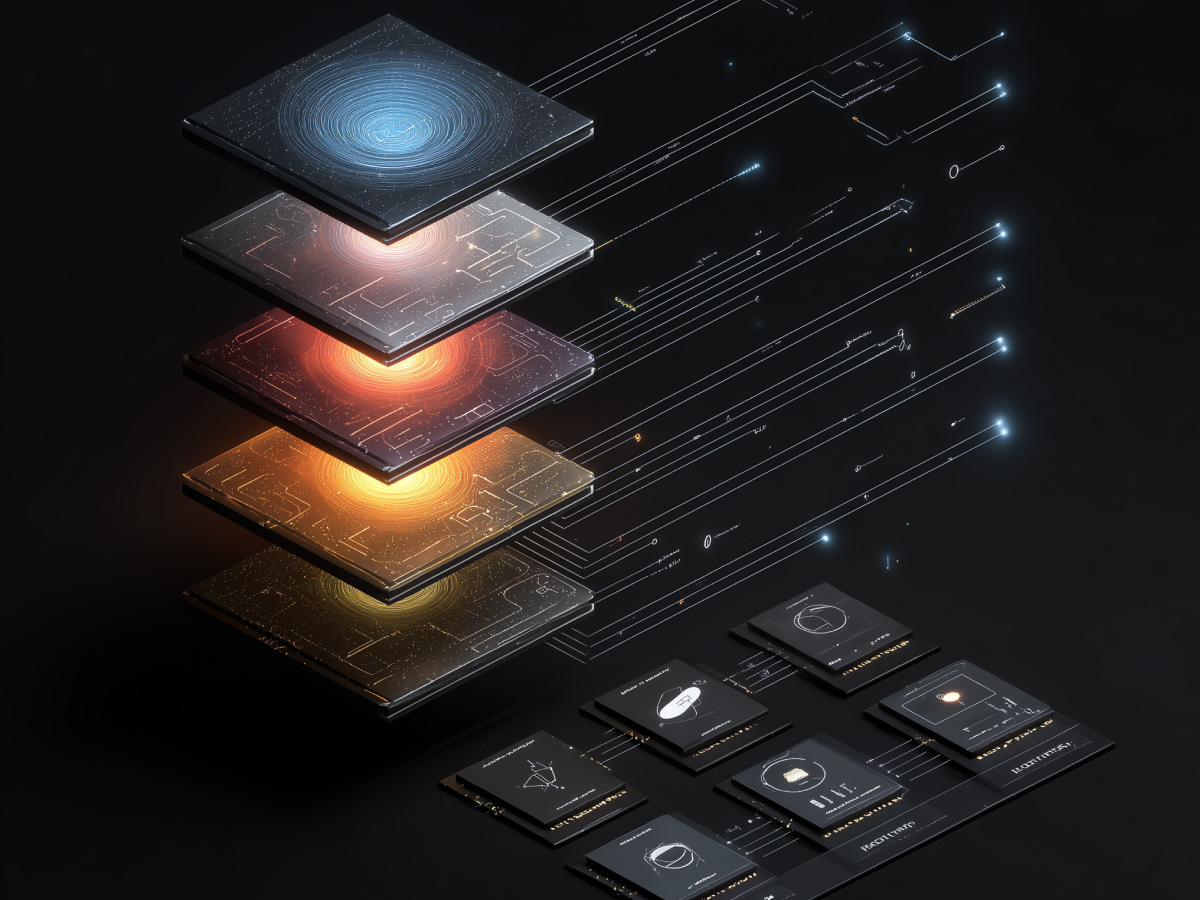

We’ve entered a new cycle in which AI agents don’t just answer pre-set queries. They’re autonomous. They remember. They learn from what they’ve done and apply it in new contexts. Yet most current AI tools treat memory like a disposable notepad. That has to change. Memory is now the core database: live, persistent, and operational. It needs structure, version control, access policies, and auditing, just like any financial system or customer database you care about.

Richmond Alake calls this shift “memory engineering.” He draws a line between an LLM’s native memory (its stored weights plus a temporary context window) and an AI agent’s memory, which is meant to persist across sessions, capture nuance, and evolve over time. That kind of memory is a moving data stream: it grows, changes, and influences what your AI does next.

If you don’t govern that stream, your AI will develop blind spots, repeat errors, or even manufacture new ones. Worse, it will do so confidently, based on whatever distorted information is baked into its memory. For the business, this creates a critical risk: an artificial decision-maker acting on corrupted, outdated, or unauthorized data. You wouldn’t allow that in your ERP system. You can’t afford it here either.

Start with the assumption that memory is just another enterprise-grade database. Give it schema. Track who inputs and accesses what. Audit everything.

Poorly governed agent memory introduces three major security risks

Security is never static, especially with AI agents. We’re at a point where these systems can access internal tools, databases, and role-based workstreams, often in real time. That’s why unmanaged memory is more than a cleanup problem. It’s a direct security threat.

There are three major risks here, and none of them are theoretical.

The first is memory poisoning. This isn’t hacking in the traditional sense; no firewalls are breached. Instead, an attacker uses ordinary inputs to teach your AI something false. Once stored in memory, that misinformation becomes part of what the system believes, and every downstream action is influenced by it. OWASP has flagged this class of threat. Red-teaming tools such as Promptfoo can now probe an agent’s memory layer to test whether it can be manipulated.

Next is tool misuse. As agents gain the ability to call APIs, access databases, or run commands, they can be subtly coerced into making bad decisions with valid credentials. For example, an attacker can trick the agent into querying a production database using an approved tool but in an unapproved context. It’s not a breach. It’s authorized misuse, which makes it harder to prevent and harder to detect.
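One way to separate “approved tool” from “approved context” is to check every tool call against a policy that lives outside the model, before the call executes. The Python sketch below assumes a hypothetical policy table keyed by tool and target, with illustrative task and role names; in practice those entitlements would come from your existing identity and access systems rather than a hard-coded dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    tool: str       # which tool the agent wants to use, e.g. "sql_query"
    target: str     # what the tool will touch, e.g. "prod_db"
    task: str       # task label assigned by the orchestrator, not by the agent
    user_role: str  # role of the human the agent is currently acting for


# Hypothetical policy: each (tool, target) pair is approved only for specific tasks and roles.
POLICY = {
    ("sql_query", "analytics_replica"): {
        "tasks": {"reporting", "forecasting"},
        "roles": {"analyst", "cfo"},
    },
    ("sql_query", "prod_db"): {
        "tasks": {"incident_response"},
        "roles": {"sre"},
    },
}


def authorize(call: ToolCall) -> bool:
    """Allow a call only if the tool/target pair is approved for this task AND this role."""
    rule = POLICY.get((call.tool, call.target))
    if rule is None:
        return False  # default deny: unlisted tool/target combinations are refused
    return call.task in rule["tasks"] and call.user_role in rule["roles"]
```

Because the check defaults to deny, a coerced request such as a production query during a routine reporting task is refused even though the `sql_query` tool itself is approved. The task label should be set by the orchestrating system, not reported by the agent, since a manipulated agent could simply claim the right context.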

Finally, there’s privilege creep. Over time, an agent accumulates knowledge. Today it supports the CFO; tomorrow it assists a junior analyst. The problem? It remembers both sessions, so it may start passing information between roles, unintentionally exposing data it was never meant to share. AI security taxonomies already call this out explicitly: agents don’t forget unless you tell them how.

For executives, the point is this: memory in these systems isn’t just about functionality. It’s an attack surface. Every uncategorized or unstructured memory record multiplies risk, and the more access the system has, the higher the stakes.

You need to define how your agents store memory, how they recall it, and when access gets revoked. And it should be managed with the same operational discipline you’d expect from any tier-one corporate system. No exceptions.

Existing enterprise data governance models can and should be extended to govern agent memory effectively

You’ve already built the systems to manage data trust. Now’s the time to use them.

Enterprises know how to govern sensitive information: customer records, financials, HR data. These systems have schemas, access roles, lineage tracking, retention schedules, and audit trails. All of that exists for a reason. Your AI agents don’t need a new security architecture. They need to inherit the one you already trust.

The industry is shifting from speed-focused deployment to structured, auditable platforms, and the C-suite conversation is changing with it. The question is no longer “How fast can we launch the agent?” It’s “How fast can we make sure its data is governed?” That’s not an annoying constraint. It’s what keeps your AI from becoming an open vector for legal, operational, and reputational exposure.

Agents operate at speed; they process data faster than human teams. If you feed them stale, mislabeled, or siloed data, they’ll amplify every flaw. These agents don’t pause to ask whether the data is right; they act on what they learn. And once a memory is formed, they carry it from session to session.

If your governance ends at the data warehouse, you’ve already got blind spots. AI systems must follow the same classification, retention, and access policies as everything else. No shortcuts. No special lanes.
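As a rough illustration of applying an existing retention policy to agent memory, the sketch below assumes each memory carries a classification label and that a hypothetical retention schedule maps labels to maximum ages. The labels and durations are placeholders for whatever your current data policy defines.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention schedule keyed by classification label.
# In practice this should mirror the schedule already applied to other enterprise data.
RETENTION = {
    "public": timedelta(days=365),
    "internal": timedelta(days=180),
    "confidential": timedelta(days=30),
}


@dataclass
class Memory:
    id: str
    classification: str
    created_at: datetime


def apply_retention(memories, now=None):
    """Split memories into (keep, expire, review) lists.

    'review' holds records whose classification has no retention rule,
    so they are neither kept silently nor deleted without a human decision.
    """
    now = now or datetime.now(timezone.utc)
    keep, expire, review = [], [], []
    for m in memories:
        limit = RETENTION.get(m.classification)
        if limit is None:
            review.append(m)
        elif now - m.created_at > limit:
            expire.append(m)
        else:
            keep.append(m)
    return keep, expire, review
```

Routing unlabeled records to review, rather than keeping or deleting them automatically, is one design choice among several; the point is that unclassified memory never silently escapes the policy.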

Many developers unintentionally create “shadow databases” with unmanaged memory storage in AI agents

Most teams don’t build insecure AI systems on purpose. But that’s what ends up happening.

Many AI frameworks ship with their own default memory layers: local vector databases, JSON files, in-memory caches. They’re quick to set up, but they come with serious gaps: no schema enforcement, no access control, no versioning, no visibility. These shadow databases grow quickly and silently, operating outside every policy you’ve already built for secure digital infrastructure.
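Versioning, one of the gaps listed above, can be closed by making memory append-only: an update adds a new version instead of overwriting the old one, so you can reconstruct what the agent believed at any point in time. This is a minimal in-process sketch with hypothetical names; a production system would back it with a governed database rather than a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class MemoryVersion:
    key: str
    value: str
    version: int
    written_by: str
    written_at: datetime


class VersionedMemory:
    """Append-only memory: every write adds a version; nothing is overwritten or deleted."""

    def __init__(self) -> None:
        self._log: List[MemoryVersion] = []

    def history(self, key: str) -> List[MemoryVersion]:
        return [v for v in self._log if v.key == key]

    def write(self, key: str, value: str, written_by: str,
              at: Optional[datetime] = None) -> MemoryVersion:
        entry = MemoryVersion(
            key=key,
            value=value,
            version=len(self.history(key)) + 1,
            written_by=written_by,
            written_at=at or datetime.now(timezone.utc),
        )
        self._log.append(entry)
        return entry

    def current(self, key: str) -> Optional[MemoryVersion]:
        versions = self.history(key)
        return versions[-1] if versions else None

    def as_of(self, key: str, when: datetime) -> Optional[MemoryVersion]:
        """What did the agent believe about `key` at time `when`?"""
        earlier = [v for v in self.history(key) if v.written_at <= when]
        return max(earlier, key=lambda v: v.written_at) if earlier else None
```

Keeping `written_by` on every version also means a poisoned update can be traced to its author and rolled back by reading the prior version.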

The problem scales fast. Copy a prototype into production, and suddenly your agents are making live decisions based on storage no one controls or monitors. You’ve created another data silo, and a worse one: it’s dynamic, opaque, and full of unverified assumptions. That means it can’t be trusted, audited, or secured with confidence.

For decision-makers, this isn’t about slowing down developers. It’s about preventing misalignment between what the system knows and how that knowledge is managed. Your security teams can’t govern what they can’t see. Shadow memory breaks that chain.

If your agent is influencing customer experiences, making decisions, triggering actions, and improving over time, it’s a production system. Its memory must live inside the governed stack.

The goal is simple: visibility, accountability, containment. If you’re not applying enterprise-grade data policies to agent memory, you’re already behind.

Database practices should be applied directly to the design and governance of agent memory

AI memory is executable data. It shapes what an agent knows, recalls, and acts on. That means standard database principles (structure, access control, validation, and traceability) aren’t optional. They’re baseline requirements if your agents are going to be reliable.

The memory layer must be structured. You don’t throw logs, numbers, and decisions into a flat file and call it a system, and treating memory as unstructured text is a liability. Every memory should carry metadata: who created it, when, and how confident the system is in its accuracy. You wouldn’t accept unlabeled financial transactions. Don’t accept unlabeled thought processes.
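A memory record carrying this metadata might look like the following sketch. The field names, the set of allowed sources, and the 0-to-1 confidence scale are illustrative assumptions, not a standard schema.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical provenance categories for how a memory entered the system.
ALLOWED_SOURCES = frozenset({"user_input", "tool_output", "agent_inference", "system"})


@dataclass(frozen=True)
class MemoryRecord:
    content: str
    created_by: str       # identity of the user, agent, or service that wrote it
    source: str           # one of ALLOWED_SOURCES
    confidence: float     # 0.0 to 1.0: how sure the system is that the content is accurate
    classification: str   # e.g. "internal" or "confidential", reusing existing data labels
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def __post_init__(self):
        # Reject unlabeled or malformed memories at construction time.
        if not self.created_by:
            raise ValueError("every memory needs an author")
        if self.source not in ALLOWED_SOURCES:
            raise ValueError(f"unknown source: {self.source!r}")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
```

Reusing the classification labels your organization already applies to other data is what lets the retention and access rules you already have apply to memory without translation.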

Next, your memory pipeline needs a firewall. Inputs into long-term memory should pass through strict validation: schema checks, consistency rules, and scanning for security threats like prompt injection or memory poisoning. This should be enforced at the memory-entry point, not after a breach has occurred.
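A write-time gate along these lines could look like the sketch below. The field names, the `fact_key` consistency rule, and the regex patterns are all hypothetical. Pattern matching only catches crude injection attempts, so it would need to be combined with stronger controls such as provenance checks and human review of contradictions.

```python
import re

# Illustrative patterns only: regexes catch crude injection attempts, not determined attackers.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"(reveal|print|show) (your |the )?system prompt", re.IGNORECASE),
]
REQUIRED_FIELDS = {"content", "created_by", "source", "confidence"}
ALLOWED_SOURCES = {"user_input", "tool_output", "agent_inference", "system"}


def validate_memory(candidate: dict, existing_facts: dict) -> list:
    """Return reasons to reject a candidate memory; an empty list means it may be stored."""
    missing = REQUIRED_FIELDS - set(candidate)
    if missing:
        # Schema check: stop early, because later rules depend on these fields.
        return [f"missing fields: {sorted(missing)}"]

    problems = []
    if candidate["source"] not in ALLOWED_SOURCES:
        problems.append(f"unknown source: {candidate['source']}")
    if not 0.0 <= candidate["confidence"] <= 1.0:
        problems.append("confidence out of range")

    # Consistency rule (hypothetical): a claim about a known fact must not silently contradict it.
    key = candidate.get("fact_key")
    if key is not None and key in existing_facts and existing_facts[key] != candidate["content"]:
        problems.append(f"contradicts existing fact '{key}'; route to human review")

    # Security scan for obvious injection phrasing.
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(candidate["content"]):
            problems.append(f"possible prompt injection: {pattern.pattern}")
    return problems
```

Returning every problem rather than stopping at the first gives reviewers the full picture when a write is blocked.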

Then there’s access control. Don’t rely on prompt logic to gate who sees what. It’s not reliable, and it won’t scale. Access policies should live at the data level. Implement row-level security so agents retrieve only the memory the current user is cleared to see. If an agent worked with the CFO last week, that doesn’t give it long-term rights to those insights while assisting an intern today. These boundaries must be enforced by the data layer.
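SQLite has no native row-level security, so this sketch enforces the rule inside the single retrieval function every read must go through, keyed on the authenticated user’s role rather than anything the model says. The clearance scheme is hypothetical. In PostgreSQL, the same rule can be declared on the table itself with `ALTER TABLE ... ENABLE ROW LEVEL SECURITY` and `CREATE POLICY`, so the database enforces it even if application code forgets the filter.

```python
import sqlite3

# Hypothetical clearance scheme: higher number = broader access.
CLEARANCE = {"intern": 1, "analyst": 2, "cfo": 3}


def create_memory_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        """CREATE TABLE memory (
               id INTEGER PRIMARY KEY,
               content TEXT NOT NULL,
               min_clearance INTEGER NOT NULL  -- lowest clearance allowed to read this row
           )"""
    )


def recall(conn: sqlite3.Connection, user_role: str, keyword: str) -> list:
    """The only read path: rows above the current user's clearance are never returned.

    `user_role` must come from the authenticated session, never from model output.
    """
    level = CLEARANCE[user_role]  # unknown roles raise KeyError instead of defaulting to access
    rows = conn.execute(
        "SELECT content FROM memory WHERE min_clearance <= ? AND content LIKE ? ORDER BY id",
        (level, f"%{keyword}%"),
    )
    return [row[0] for row in rows]
```

With a CFO-only draft stored at `min_clearance` 3, an intern’s query never returns it, regardless of what the agent remembers from earlier sessions.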

Finally, audit everything. You need full traceability: what memory was accessed, when, and why. Business leaders need this visibility not just for compliance, but to debug decisions. If an agent takes an unexpected action, you should be able to see exactly which memory triggered it.
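The sketch below shows one way to tie memory reads to the actions they informed. It assumes retrieval by explicit memory ID as a stand-in for whatever search your store actually performs; the log format and the `action_id` linkage are hypothetical, and in production the log would go to tamper-evident storage rather than a Python list.

```python
import json
from datetime import datetime, timezone


class AuditedMemory:
    """Wraps a memory store so every read is logged with who, when, why, and which records."""

    def __init__(self, records: dict):
        self._records = records  # memory_id -> content
        self.audit_log = []      # append-only list of JSON lines

    def recall(self, memory_ids, user: str, reason: str, action_id: str) -> dict:
        results = {mid: self._records[mid] for mid in memory_ids if mid in self._records}
        self.audit_log.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "reason": reason,
            "action_id": action_id,           # links this read to the action it informed
            "memory_ids": sorted(results),    # only records actually returned are logged
        }))
        return results

    def memories_behind(self, action_id: str) -> list:
        """Answer 'which memory triggered this action?' from the audit log alone."""
        ids = set()
        for line in self.audit_log:
            entry = json.loads(line)
            if entry["action_id"] == action_id:
                ids.update(entry["memory_ids"])
        return sorted(ids)
```

Because each entry records the action it fed into, debugging an unexpected action starts from `memories_behind(action_id)` instead of guesswork.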

Brij Pandey makes this point clear: databases have been the backbone of secure and scalable applications for years. That doesn’t change with AI. It actually matters more now. You’re not just storing data. You’re storing operational context that drives autonomous decision-making.

Enterprises already equipped for data governance have a competitive edge

Trust in AI is often discussed in abstract terms: alignment, fairness, transparency. All important. But for enterprise adoption, trust comes down to something more operational: control.

Companies that already govern how they store, retrieve, and use information are in the best position to scale intelligent systems. Why? Because they don’t need to build new infrastructure, they just need to apply existing controls to a new type of workload. That’s not a huge leap. It’s an advantage.

If you already manage sensitive data with retention rules, role-based access, version history, and audit logs, you’re most of the way there. Extending that discipline to AI memory means your agents can access insights without creating liability. It also means any issue, whether a system bug or a data flaw, can be diagnosed, traced, and resolved.

For C-suite leaders, this is key. Governance is your multiplier. It lets you adopt powerful tools, such as agents that make decisions and interact with operational systems, with confidence rather than hesitation or compromise.

The difference between innovation and chaos is control. Enterprises that get governance right will build faster, scale safer, and pull ahead.

Key takeaways for leaders

- Treat AI memory as core infrastructure: Agent memory isn’t temporary; it shapes outcomes. Leaders should manage it like a critical enterprise database with schema, access control, and auditability to ensure safe, reliable AI behavior.

- Anticipate and mitigate memory-related security risks: Memory poisoning, tool misuse, and privilege creep are active threats. Executives must implement strict validation, enforce access boundaries, and regularly audit agent memory to reduce exposure.

- Extend existing data governance to AI systems: Organizations already managing customer and financial data have the infrastructure needed. Leaders should apply those same governance models to AI memory to reduce risk without reinventing operations.

- Eliminate shadow memory systems: Many agent frameworks default to unmanaged storage, creating blind spots. Leaders must mandate integration of agent memory into enterprise-grade data infrastructure to maintain visibility and control.

- Apply proven database architecture principles to AI memory: AI memory requires structure, validation, and traceability. Decision-makers should enforce schema, firewall logic, row-level access, and memory trace audits to keep systems trustworthy and secure.

- Use data governance maturity as a strategic advantage: Enterprises with strong governance foundations can scale AI agents faster and safer. Leaders should view AI readiness as an extension of existing governance capability, not a separate initiative.