Traditional monolithic architecture limits agentic AI integration

A lot of companies want to build AI into their products, but most aren’t ready for it. The problem isn’t the AI; it’s the structure of the system it’s being added to. If your backend is a monolith, essentially one large codebase that handles everything, it won’t give AI the access it needs, or the performance it requires, to do useful work.

Agentic AI, which is AI that can make decisions and take action with little or no human input, needs context. And it needs control. In a monolithic architecture, APIs usually expose only the basics: whatever engineers needed years ago to get a webpage working. AI needs more than that. It needs deeper access to internal logic, historical data, and system state. In most traditional systems, much of this is buried and inaccessible. So when you put an AI agent into that environment, it operates blindly and inefficiently.

Another issue is safety. When you expose performance-critical components of a monolithic backend, like a transactional database, to an AI agent with real decision-making power, you widen your risk surface considerably. You’re giving a highly reactive system permission to hit critical infrastructure directly, with no guarantees about how it will behave. That’s a massive risk.

If you expect an agentic AI system to perform accurately, safely, and quickly, it can’t be built on outdated architecture. That’s not a software opinion; it’s a business reality.

C-suite executives need to understand that this isn’t about IT design preferences. It’s about whether your AI strategy will deliver value or fizzle out. Executive investment in AI is rising fast. But bolting an AI engine onto a brittle, closed architecture is the fastest route to failure, operationally and financially. If the system can’t adapt, the AI won’t scale, and that slows time to value.

Agentic AI leverages LLMs to autonomously drive decisions and actions

Agentic AI isn’t a chatbot answering questions. It’s a system that can observe what’s happening, make decisions based on context, and act autonomously, all powered by large language models (LLMs). That’s a big shift. Instead of waiting for human input, agentic AI can trigger itself based on live system events, much closer to how real teams operate in fast-moving environments.

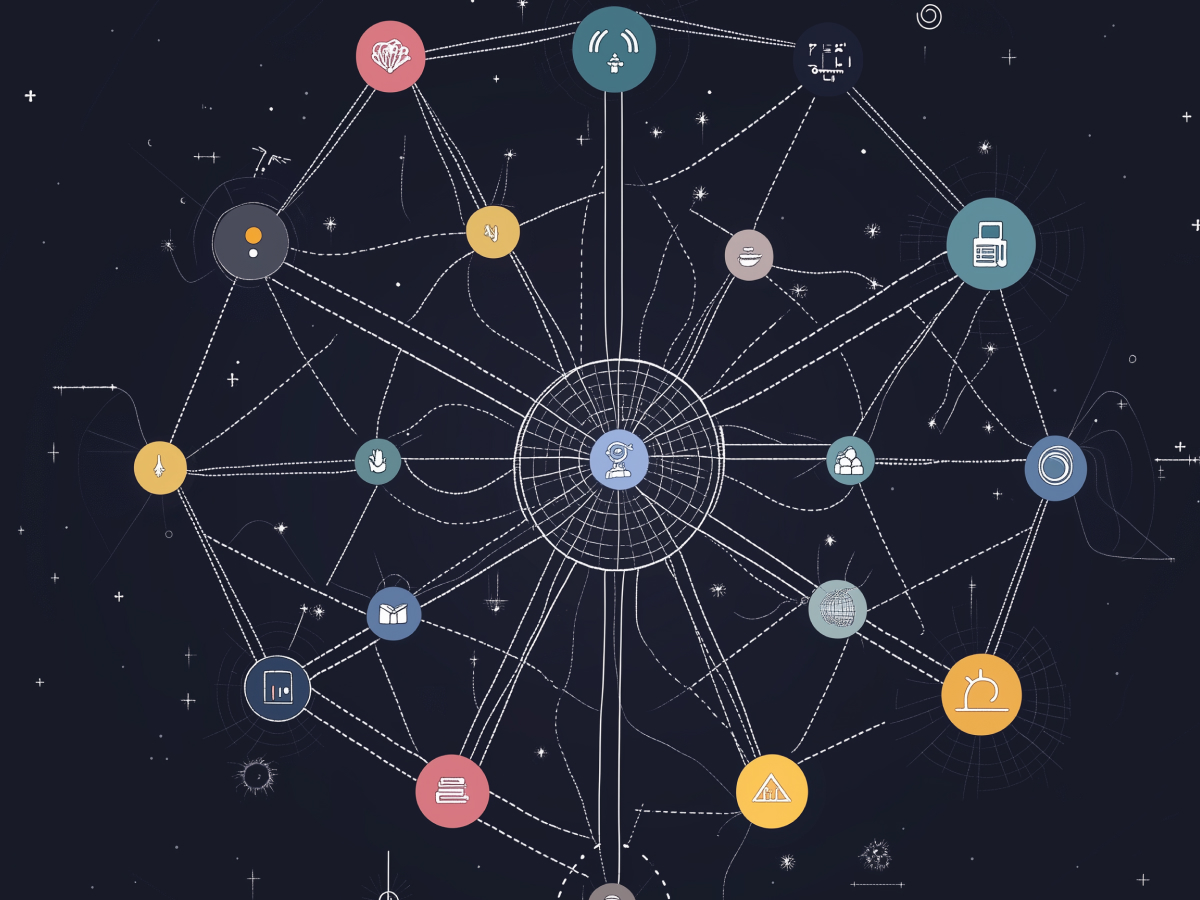

The LLM is at the core. It takes in system-level data and breaks broad goals down into precise tasks. Then it takes action: updating records, generating responses, and triggering internal workflows. For any of that to work, the architecture behind it has to provide the right inputs: timely data, clear context, and safe access points. Once that architecture is in place, you’re no longer just automating tasks. You’re creating systems that adapt in real time and respond intelligently to complexity and change.
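To make the observe, plan, act cycle concrete, here is a minimal Python sketch. It assumes a hypothetical `plan_tasks` function standing in for the LLM call (a real agent would prompt a model with the goal and observed context, then parse its reply into tasks), plus hypothetical action names such as `update_record` and `send_message`. The point is the shape of the loop, not a production implementation.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Task:
    action: str               # name of an internal action the agent may take
    payload: dict[str, Any]   # arguments for that action


def plan_tasks(goal: str, context: dict[str, Any]) -> list[Task]:
    """Hypothetical stand-in for the LLM planning step.

    A real agent would send the goal and context to a model and parse its
    reply; this stub hard-codes one rule so the loop is runnable.
    """
    tasks: list[Task] = []
    if context.get("order_status") == "cancelled":
        tasks.append(Task("update_record", {"order_id": context["order_id"], "flag": "follow_up"}))
        tasks.append(Task("send_message", {"customer_id": context["customer_id"], "template": "win_back"}))
    return tasks


def run_agent(goal: str, context: dict[str, Any],
              actions: dict[str, Callable[..., Any]]) -> list[Any]:
    """Observe (context) -> plan (tasks) -> act (call each mapped action)."""
    results = []
    for task in plan_tasks(goal, context):
        handler = actions[task.action]   # only registered actions can run
        results.append(handler(**task.payload))
    return results


# Example wiring with hypothetical action handlers.
actions = {
    "update_record": lambda order_id, flag: f"order {order_id} flagged {flag}",
    "send_message": lambda customer_id, template: f"sent {template} to {customer_id}",
}
observed = {"order_status": "cancelled", "order_id": "A17", "customer_id": "C42"}
results = run_agent("retain customers who cancel", observed, actions)
```

Because the agent can only invoke actions present in the `actions` mapping, that mapping doubles as a simple boundary on what it is allowed to do.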

This kind of system can, for example, handle customer issues based on behavior analysis, without waiting on human support staff. Or adjust pricing strategy mid-stream based on competitor activity and prior outcomes. These aren’t theoretical use cases. They’re already happening inside modern platforms that understand how to operationalize AI at scale.

This matters to executives because the promise of AI (more speed, lower cost, better decisions) only becomes real when the AI can operate independently. That independence demands architecture that supports autonomy, not just surface-level functionality. If your teams are still thinking of AI as a plugin or an assistant, it’s time for a mindset reset. The goal is not just automation. It’s autonomous operation backed by verifiable outcomes. This is where strategic advantage starts to materialize.

A microservices-based architecture provides the ideal foundation for agentic AI

If you’re thinking seriously about implementing autonomous AI in your systems, the place to start isn’t the model; it’s the infrastructure. Specifically, you need a microservices architecture, which breaks your backend down into focused, independent services. Each one handles a specific function and exposes its data and actions through simple, well-defined interfaces. That’s the environment an AI agent thrives in.

An agentic LLM needs access to different parts of your system to understand context, make decisions, and perform tasks. With microservices, you can control exactly what the AI can access and when. This structure also keeps performance high, because each service scales independently. If the AI’s workload is memory-hungry or data-intensive, you scale and optimize only the services it relies on, without affecting the rest of the system. It also simplifies security and governance: the AI gets guardrails, and your critical infrastructure stays protected.
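One way to give an agent controlled access is to route every call through a gateway that checks an allowlist of service operations. The sketch below assumes hypothetical `orders` and `reviews` services, modeled as in-process functions for brevity; in a real deployment each would sit behind its own network API, and the gateway could also enforce authentication and rate limits.

```python
from typing import Any, Callable


class PermissionDenied(Exception):
    """Raised when an agent calls an operation it has not been granted."""


class ServiceGateway:
    """Hypothetical gateway exposing only allowlisted (service, operation) pairs to an agent."""

    def __init__(self, services: dict[str, dict[str, Callable[..., Any]]],
                 allowlist: set[tuple[str, str]]) -> None:
        self._services = services
        self._allowlist = allowlist

    def call(self, service: str, operation: str, **kwargs: Any) -> Any:
        # Reject anything not explicitly granted before it reaches the service.
        if (service, operation) not in self._allowlist:
            raise PermissionDenied(f"{service}.{operation} is not exposed to this agent")
        return self._services[service][operation](**kwargs)


# Hypothetical services; in practice each would be a separate microservice.
orders_db = {"A17": {"status": "cancelled", "customer_id": "C42"}}
services = {
    "orders": {
        "get_order": lambda order_id: orders_db[order_id],
        "delete_order": lambda order_id: orders_db.pop(order_id),
    },
    "reviews": {
        "top_reviews": lambda product_id, n=3: [f"review {i} for {product_id}" for i in range(n)],
    },
}

# The agent may read orders and fetch reviews, but may not delete orders.
gateway = ServiceGateway(services, allowlist={("orders", "get_order"), ("reviews", "top_reviews")})
```

Here a call to `delete_order` is rejected at the gateway, so the orders service, and the data behind it, never sees the request.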

More important than convenience is capability. With this architecture, you can plug in memory services, for both short-term and long-term recall, so the AI can reason across sessions and improve over time. That turns a static tool into a learning system that refines how it operates every time it acts.

For C-suite leaders, this might seem like a technical restructure, but the business impact is strategic. Microservices don’t just enable AI; they future-proof it. By structuring your system modularly, you’re building a business that can respond faster, scale more precisely, and adopt future technologies with far less friction. Long-term ROI in AI is driven by how well your core infrastructure supports autonomous behaviors. That support comes from microservices, not monoliths.

Event-driven design enhances AI agent reactivity and overall system scalability

In an event-driven architecture, systems communicate by publishing and consuming messages when something happens, such as a transaction update or a user action. This setup lets AI agents subscribe to the events they care about and act on them immediately. Unlike a request-driven system that sits idle until it receives a command, an event-driven setup delivers data to your AI at the moment it becomes relevant.

This approach allows multiple autonomous agents to scale and react in parallel without getting in each other’s way. You could have one AI agent watching for signs of customer churn and another optimizing sales outreach, both reacting to the same event in different ways. Because they’re decoupled, the system stays fast and resilient even as complexity increases.
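The publish/subscribe pattern behind this can be sketched in a few lines of Python. The `EventBus` class below is an in-memory stand-in assumed for illustration; a production system would typically use a message broker such as Apache Kafka or RabbitMQ and deliver events asynchronously. The two agent handlers are hypothetical and simply record what they would do.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (a stand-in for a real message broker)."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        # Every subscriber receives the same event; the publisher never knows who is listening.
        for handler in self._subscribers[event_type]:
            handler(event)


at_risk: list[str] = []          # written by the hypothetical churn agent
outreach_queue: list[str] = []   # written by the hypothetical sales agent


def churn_agent(event: Event) -> None:
    at_risk.append(event["customer_id"])


def outreach_agent(event: Event) -> None:
    outreach_queue.append(f"win-back offer for {event['customer_id']}")


bus = EventBus()
bus.subscribe("order_cancelled", churn_agent)
bus.subscribe("order_cancelled", outreach_agent)
bus.publish("order_cancelled", {"order_id": "A17", "customer_id": "C42"})
```

Neither agent knows the other exists, and the publisher knows neither. Adding a third agent is one more `subscribe` call, with no change to the code that emits the event.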

Consider a case where an order is cancelled. The event is captured, and the AI agent picks it up. Using recent customer activity and LLM-driven sentiment analysis, the agent determines that the cancellation was driven by bad reviews. It generates a personalized email highlighting more favorable reviews, adds a promotional offer, and sends it. Later, when the customer returns and places a new order, that follow-up event is captured and written to the agent’s long-term memory. The agent learns that its strategy worked and can reuse it next time.

Executives should recognize that reactive, real-time AI isn’t about more automation; it’s about better timing. Event-driven systems give AI the structural awareness it needs to make intelligent decisions that impact key business metrics. This leads to smarter customer engagement, faster anomaly detection, and stronger feedback loops. That’s not just technical performance. That’s bottom-line impact. The faster your architecture can respond to change, the more effectively your AI can deliver business value.

Memory-driven adaptability enhances AI learning and continuous improvement

When AI is connected to short- and long-term memory services, it stops being reactive and starts becoming strategic. These memory services allow agentic AI to record what happened before, recall it later, evaluate patterns, and adjust its behavior. This isn’t just a technical capability; it’s a requirement if you expect the AI to improve over time with minimal human input.

Memory creates a feedback loop. For example, after an AI agent responds to a cancelled order with a targeted email, it can record whether the customer returns and makes a new purchase. If so, that tactic is tagged as effective and stored for future reference. Over time, the agent builds an increasingly precise picture of which strategies work best, for which users, and under which conditions. It no longer acts only on its training data; it acts on live outcomes.
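A minimal sketch of this feedback loop, assuming a hypothetical `OutcomeMemory` service: short-term memory holds the tactic used on each customer until an outcome arrives, and long-term memory keeps per-tactic counts of attempts and successes. The in-memory dictionaries stand in for whatever persistent store you would actually use, and the tactic names are illustrative.

```python
from collections import defaultdict


class OutcomeMemory:
    """Hypothetical memory service for an agent's follow-up tactics.

    Short-term: which tactic was used on which customer, awaiting an outcome.
    Long-term: per-tactic counts of attempts and successes.
    """

    def __init__(self) -> None:
        self.pending: dict[str, str] = {}
        self.stats: defaultdict[str, dict[str, int]] = defaultdict(lambda: {"tried": 0, "worked": 0})

    def record_action(self, customer_id: str, tactic: str) -> None:
        self.pending[customer_id] = tactic
        self.stats[tactic]["tried"] += 1

    def record_outcome(self, customer_id: str, returned: bool) -> None:
        # Resolve the pending entry; count a success only if the customer came back.
        tactic = self.pending.pop(customer_id, None)
        if tactic is not None and returned:
            self.stats[tactic]["worked"] += 1

    def best_tactic(self, default: str) -> str:
        # Pick the tactic with the highest observed success rate.
        rates = {t: s["worked"] / s["tried"] for t, s in self.stats.items() if s["tried"] > 0}
        return max(rates, key=rates.get) if rates else default


memory = OutcomeMemory()
memory.record_action("C42", "reviews_plus_offer")  # the email from the cancelled-order example
memory.record_action("C77", "generic_discount")
memory.record_outcome("C42", returned=True)        # a new order event arrived
memory.record_outcome("C77", returned=False)       # no return within the window
```

This sketch scores tactics globally. Capturing which strategies work for which users and under which conditions would mean keying the long-term statistics on something like a (segment, tactic) pair instead of the tactic alone.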

This mechanism also increases decision consistency across the system. For agents working across customer service, operations, or finance, memory services ensure that lessons learned in one workflow can inform actions in others. That broadens impact without multiplying oversight.

For executives, this capability shifts how you evaluate AI success. Instead of just measuring initial performance, you start thinking in terms of retention, compounding value, and adaptability. A system that learns from its own history can adapt faster than any rule-driven logic or static automation. That kind of responsiveness is a long-term competitive lever. And critically, it requires intentional investment in memory services, not as extras, but as core infrastructure components built into your AI deployment.

Establishing a robust microservices foundation is critical to the success of AI projects

More than 80% of AI projects don’t produce real business outcomes. Most of the time, the issue isn’t the AI model. It’s the foundation it’s built on. If your current system can’t give the AI access to the right data at the right time, if performance bottlenecks slow down reasoning, or if the architecture creates more risk than control, then the AI won’t scale, won’t adapt, and won’t deliver return.

The right architecture is non-negotiable. Microservices enable rapid access to data, clear execution paths, and modular scalability, all of which agentic AI depends on. Event-driven communication and asynchronous operations give AI systems the autonomy and responsiveness needed to handle real-world complexity. Without these, the AI’s capabilities are effectively throttled.

This isn’t about overhauling everything at once. The transition from monolithic to microservices architecture can be done incrementally. But leadership needs to commit to making the shift. Strategic investments in architectural modernization allow companies to avoid short-term patches and unlock long-term AI potential.
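A widely used way to migrate incrementally, not named in this article, is the strangler fig pattern: a routing layer sends requests for already-extracted capabilities to new services and lets everything else fall through to the monolith. The sketch below assumes hypothetical path prefixes and uses plain functions in place of real HTTP services.

```python
from typing import Callable

Handler = Callable[[str], str]


class StranglerRouter:
    """Routes requests to extracted services by path prefix; the rest go to the monolith."""

    def __init__(self, monolith: Handler) -> None:
        self._monolith = monolith
        self._routes: list[tuple[str, Handler]] = []

    def extract(self, prefix: str, service: Handler) -> None:
        """Register a newly extracted microservice for a path prefix."""
        self._routes.append((prefix, service))
        # Check longer (more specific) prefixes first.
        self._routes.sort(key=lambda route: len(route[0]), reverse=True)

    def handle(self, path: str) -> str:
        for prefix, service in self._routes:
            if path.startswith(prefix):  # simple prefix match, enough for a sketch
                return service(path)
        return self._monolith(path)


# Hypothetical wiring: only order handling has been extracted so far.
router = StranglerRouter(monolith=lambda path: f"monolith:{path}")
router.extract("/orders", lambda path: f"orders-service:{path}")
```

Each extraction moves one capability out of the monolith without a big-bang cutover, and the monolith shrinks as more prefixes are routed away.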

For the C-suite, the message is direct: investing in AI without investing in the foundational architecture is a high-cost, high-failure strategy. If your teams are deploying advanced AI on outdated systems, you’re not building future-ready products; you’re creating fragile short-term experiments. If, instead, you commit to architecture that supports modularity, scale, and autonomy, you’re setting the stage for durable systems that learn, improve, and outperform over time.

Key takeaways for leaders

- Rethink legacy architecture: Monolithic systems don’t support autonomous AI due to limited data access and control. Leaders should prioritize modernizing infrastructure to avoid AI underperformance and system risk.

- Enable autonomous AI with the right AI model: Agentic AI relies on LLMs capable of understanding goals and acting without human input. Use LLM-powered agents only when the architecture can deliver real-time context and safe execution pathways.

- Build with microservices by design: Microservices architecture gives AI agents access to modular, scalable services tailored to specific tasks. Prioritizing this design ensures performance, control, and safe AI deployment across systems.

- Adopt event-driven workflows: Event-driven systems allow AI agents to respond instantly to real-world signals. Leaders should use this approach to drive real-time reactions and decoupled scalability without overengineering.

- Leverage AI memory for business growth: Short- and long-term memory services turn agentic AI into a learning system that adapts at scale. Executives should invest in memory infrastructure to improve outcomes across user engagement and operations.

- Treat architecture as a make-or-break factor: Over 80% of AI projects fail to deliver business outcomes, most often because of weak infrastructure rather than AI quality. Leaders must align AI investments with architectural readiness or risk wasted spend and stalled innovation.