Poor tech stack decisions hinder scalability, raise costs, and compromise security

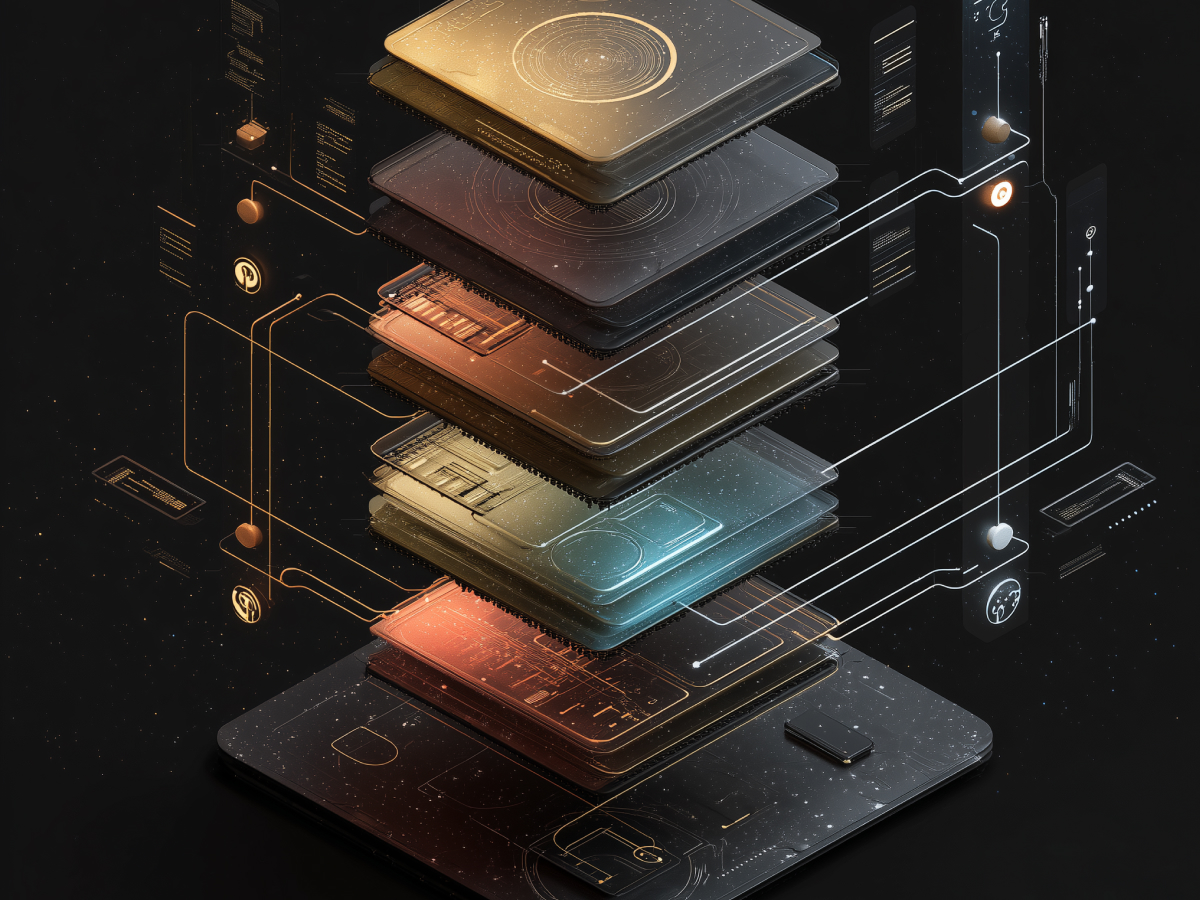

Most teams move fast in the early stages. They’re trying to prove a concept, minimize costs, and ship product. That’s fine, for a while. But if the tech stack wasn’t built to scale or wasn’t chosen with long-term survivability in mind, you’re setting yourself up for friction, not growth.

A quick-fix stack might help you get to market. It might even help you gain traction. But it can’t carry heavy workloads, adapt to new user demands, or support evolving infrastructure. The moment user adoption takes off, these short-sighted choices block your progress. Performance bottlenecks show up. Security holes become visible. Modern engineers skip your job postings because you’re working with outdated frameworks. And margins shrink as cloud costs explode, driven by inefficient architecture rather than user load.

Security becomes harder to manage as you layer on patches rather than build from a solid base. Technical debt multiplies, and you end up paying in talent, time, and dollars. You can lose momentum, investor confidence, and even your top performers. These aren’t abstract risks; they’re real traps companies fall into because they optimized for speed instead of durability early on.

If the architecture doesn’t adapt, the business won’t either. So before you’re drawn to familiar tools or quick wins, stop. Ask whether this foundation supports your next stage, and the one after that.

Infrastructure decisions should align with long-term business needs

Tech infrastructure isn’t just an engineering concern; it’s a business decision. The framework you choose directly affects your cost structure, your speed to innovate, and your ability to respond when market demand spikes or conditions shift quickly.

Startups usually want speed and flexibility. Larger firms want stability, compliance, and operational clarity. But every company, regardless of size, needs to work with infrastructure that supports the next phase of growth without breaking. That requires clarity on your long-term goals.

If you choose a solution that’s cheap or fast to implement now, but that carries heavy maintenance costs later, you’re betting short. And hidden costs build up. Forced migrations, obscure dependencies, or the need for rare developer talent can burn time and money fast.

A good infrastructure plan should scale with demand, allow for easy updates, and be straightforward to maintain. That means thinking beyond this quarter, this product version, this team. It means selecting tools and systems not just for what they support today, but for what they unlock a year or two down the line.

Business leaders need to be part of that decision from day one. If the tech doesn’t line up with the business model, it’s only a matter of time before friction shows up. And friction in tech is friction in operations, revenue, and growth velocity.

Future-ready infrastructure plans aren’t just about picking tools; they’re about reducing future risk. That’s the mindset to adopt.

Prioritize technologies with vibrant developer communities and accessible talent

When you’re selecting core technologies, don’t focus only on functionality. Consider who can support it, who can build with it, and how fast they can get up to speed. A well-supported technology with an active global developer community has lasting value. It means faster onboarding, more open-source tools, quicker debugging, and access to real-time help from thousands of contributors solving problems every day.

If your infrastructure depends on a niche language or dying platform, you’ll spend valuable time searching for people who know how to work with it, if you can find them at all. And the few who do have the skills will demand a premium. Worse, your internal teams may lose time and interest trying to adapt. That slows releases, increases bugs, and introduces barriers to scaling into new products or markets.

There’s also a risk in dragging your team into something they haven’t bought into. Make space for their input early. If they have experience and opinions on tools that will help them execute better over time, listen. Platforms chosen with full team buy-in are adopted faster and used more effectively. They reduce churn, increase accountability, and drive innovation.

Leaders need to make sure they aren’t choosing tools in a vacuum. Scaling is linked not only to what your systems can do, but to what your people can do with those systems at the pace the market requires.

Scalable, secure, and cloud-native solutions are essential

Scalability without security will fail. Security without scale will limit growth. The only viable long-term path is to invest in systems that are both. Cloud-native platforms make this easier by design: they’re built for distributed use, they manage scaling automatically, and they can evolve quickly with business demand.

But this flexibility can come at a price. If you’re not paying close attention, cloud costs can scale out of control. Budgets get blown not because the user base expanded too fast, but because the system wasn’t optimized at the architecture level. Leaders need to go beyond basic budgeting. Include FinOps professionals or build in cost-management workflows to ensure your cloud spend doesn’t drift away from business performance metrics.
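One way to make "spend that tracks business performance" concrete is to monitor unit cost, such as cloud spend per active user, instead of the raw bill. The sketch below is a minimal illustration under stated assumptions. The `MonthlyUsage` record, the choice of active users as the business metric, and the 15% tolerance are all hypothetical. A real workflow would pull these figures from your billing export and analytics.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    month: str
    cloud_spend: float  # total cloud bill for the month, in dollars
    active_users: int   # business metric the spend should track (assumed)


def cost_per_user(usage: MonthlyUsage) -> float:
    """Unit cost: dollars of cloud spend per active user."""
    if usage.active_users <= 0:
        raise ValueError("active_users must be positive")
    return usage.cloud_spend / usage.active_users


def flag_cost_drift(history: list[MonthlyUsage], tolerance: float = 0.15) -> list[str]:
    """Return the months whose unit cost exceeds the first month's by more than `tolerance`."""
    baseline = cost_per_user(history[0])
    return [
        usage.month
        for usage in history[1:]
        if cost_per_user(usage) > baseline * (1 + tolerance)
    ]
```

Spend can grow month over month without being flagged, as long as it grows in step with users. Only the months where cost per user climbs past the tolerance get surfaced for review.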

Another major issue to lock down from the start is security. Don’t let it be reactive. If you’re storing customer data, facilitating payments, or running live transactions, you need built-in, end-to-end protection. This isn’t just a box to check. It’s a competitive edge and a compliance requirement. If your infrastructure can’t secure your customer base, your brand becomes the risk.

Finally, optimize with intent. Fragmented infrastructure choices limit feature development, frustrate developers, and slow release cycles. Consistent use of scalable, well-integrated, secure systems reduces noise and increases speed.

Ongoing maintenance should be cost-effective and manageable

Every system you build or buy creates a long-term support obligation. That’s not an optional part of technology strategy; it’s the core of it. Maintenance costs increase as complexity grows, and if the tech stack isn’t modern, clean, and standardized, those costs get out of control fast.

Outdated platforms, unmaintained dependencies, and patched-up workarounds all contribute to technical debt. The more you delay addressing it, the more expensive it becomes. Large teams end up dedicating more time to keeping the system afloat than pushing product improvements forward. That’s wasted capacity, and it compounds every quarter you ignore it.

Choosing technologies with strong update cycles, stable APIs, and active vendor or open-source support reduces those burdens. Systems need to evolve alongside your product and user base without creating blockers for the engineering team. This also means organizing documentation, enforcing internal coding standards, and removing architecture designed solely to meet short-term deadlines.

C-suite leaders should be thinking beyond cost-center logic when it comes to maintenance. You’re not simply absorbing operational expenses; you’re either enabling velocity or bottlenecking growth. If your teams spend more of their time maintaining than building, the system isn’t working.

Plan upgrade and migration paths early

Even the best infrastructure decisions have a shelf life. New customer needs, evolving platforms, or sudden user growth can force migrations or upgrades. If you haven’t built in flexibility from the start, these changes are risky, expensive, and disruptive.

The most capable teams aren’t the ones that avoid change; they’re the ones that prepare for it. This requires clear upgrade paths built into your platform choices. It means running systems that support modular growth, allow testing without downtime, and can migrate between major vendors or environments without rebuilding everything from scratch.
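A common way to keep a vendor move from turning into a rebuild is to have application code depend on a narrow, provider-neutral interface, with one adapter per vendor. The sketch below assumes a simple object-storage use case. `BlobStore`, `InMemoryStore`, and `migrate` are hypothetical names, and the in-memory adapter stands in for adapters that would wrap real vendor SDKs.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Provider-neutral storage interface the application codes against (hypothetical)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...

    @abstractmethod
    def keys(self) -> list[str]: ...


class InMemoryStore(BlobStore):
    """Stand-in adapter; a real adapter would wrap one vendor's storage SDK."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def keys(self) -> list[str]:
        return sorted(self._objects)


def migrate(source: BlobStore, target: BlobStore) -> int:
    """Copy every object from source to target and return how many were copied."""
    copied = 0
    for key in source.keys():
        target.put(key, source.get(key))
        copied += 1
    return copied
```

Callers only ever see `BlobStore`. Switching providers then means writing one new adapter and running a copy step, not rewriting every service that stores data.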

Don’t assume today’s tech provider fits tomorrow’s requirements. Agreements shift, features stagnate, support dries up. Whether it’s a move from one cloud provider to another or an internal decision to re-architect key services, the systems you invest in should make those transitions smooth, not painful.

Leadership has to back this kind of preparation. It might look like overengineering up front, but what you’re really doing is protecting scale, uptime, and execution speed. When it’s time to transition, you’ll avoid disruption, not because you got lucky, but because your systems were designed to evolve.

Evolving infrastructure technologies demand modern approaches

The fundamentals of infrastructure have changed. Virtualization replaced static servers. Cloud platforms replaced physical data centers. Now containers (Docker) and orchestration platforms such as Kubernetes are replacing yesterday’s monolithic deployment models. If you’re still relying on legacy systems, you’re slowing down your entire organization.

Modern infrastructure is built for distributed, dynamic workloads. It adapts, updates, and manages complexity with layers designed for resilience and performance. You can’t get that with outdated systems or hardware-centric thinking. Kubernetes isn’t just hype; it has become a standard at scale because it gives engineering teams more control, better uptime, and functional separation across environments.

Enterprise systems also benefit from hybrid and multicloud strategies. This has nothing to do with trend-chasing and everything to do with flexibility and risk management. If you’re locked into one vendor, you’re exposed, not just to outages, but to pricing changes and platform limitations. Strategic diversification lets you optimize cost, meet regulatory expectations, and maintain continuity when providers fall short.

A forward-looking infrastructure strategy isn’t optional anymore. You either build for adaptability now or get forced into large, reactive changes later.

AI and ML integrations optimize operations and enhance services

Artificial intelligence and machine learning are moving beyond research and prototyping to become infrastructure accelerators. Cloud vendors are embedding AI into core offerings, helping organizations automate more operations and predict performance issues before they happen. This isn’t theoretical: the productivity gains from AI-driven cloud management are measurable and already impacting margins.

AI-driven optimization supports real-time resource scaling, detects anomalies, and handles common support tickets via intelligent automation. It also improves system security by analyzing behavior patterns and identifying breaches faster than manual processes can. These applications reduce both headcount inefficiencies and system downtime.
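Commercial AIOps tools use far richer models, but the core idea behind anomaly detection can be shown with a plain rolling z-score. Each new metric sample is compared against a recent window, and large deviations are flagged. This is a minimal statistical sketch, not a machine-learning method. The function name, window size, and threshold are illustrative assumptions.

```python
from __future__ import annotations

from statistics import mean, stdev


def detect_anomalies(samples: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of samples that deviate from the trailing window's mean
    by more than `threshold` standard deviations (a rolling z-score)."""
    anomalies: list[int] = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation; needs window >= 2
        if sigma == 0:
            # Perfectly flat history: treat any change at all as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
        elif abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

For example, a latency series of `[100, 102, 98, 101, 99, 100, 250, 101]` flags only index 6, the 250 ms spike.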

And it doesn’t stop there: ML enables predictive analytics, which helps leadership teams detect business trends based on usage data, identify market shifts earlier, and make faster product roadmap decisions. None of this works well without integration into your infrastructure from the start.

Executives should view AI not just as a feature but as a multiplier. When AI is embedded in cloud operations, outcomes improve across IT, operations, and finance. That’s not a technology trend; it’s a structural advantage.

Hybrid and multicloud models improve flexibility, cost-efficiency, and resilience

Global enterprises no longer rely on a single cloud provider to run core systems. The risk is too high, and the tradeoff isn’t worth it. Hybrid and multicloud models now play a critical role in performance, compliance, pricing leverage, and continuity of operations.

Hybrid cloud allows organizations to combine private and public environments, which is particularly relevant in sectors with strict regulatory requirements. Banking, healthcare, and government applications benefit from hosting sensitive data privately while still leveraging public cloud for scale and external-facing services. This model ensures that regulatory boundaries are met without limiting performance.

Multicloud strategies go a step further, blending services from multiple cloud vendors to optimize specific workloads. If one provider offers better data storage rates and another delivers lower latency in your core markets, use both. What matters is functional performance, not brand alignment. An added benefit is negotiating leverage. When you diversify your providers, you protect yourself from lock-in and gain room to navigate pricing or service changes.
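Workload-by-workload placement can be expressed as a simple rule. The sketch below is hypothetical. `ProviderOffer`, the provider names in the usage, and the two criteria (storage price and latency to your core market) are illustrative assumptions. A real placement decision would weigh considerably more factors.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderOffer:
    name: str
    storage_cost_per_gb: float  # dollars per GB-month (illustrative figure)
    latency_ms: float           # measured latency to your core market (illustrative)


def pick_provider(offers: list[ProviderOffer], latency_sensitive: bool) -> ProviderOffer:
    """Send latency-sensitive workloads to the lowest-latency offer and
    everything else to the cheapest storage."""
    if not offers:
        raise ValueError("need at least one offer")
    if latency_sensitive:
        return min(offers, key=lambda offer: offer.latency_ms)
    return min(offers, key=lambda offer: offer.storage_cost_per_gb)
```

With two offers, say a cheaper-storage `provider_a` and a lower-latency `provider_b`, archival workloads land on the first and user-facing workloads on the second. That is the "use both" approach in code.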

Adoption timelines reinforce this momentum. Enterprises are no longer piloting multicloud; they’re scaling with it. The shift is already underway, and it’s accelerating.

Edge computing reduces latency and enhances data control, especially for IoT

As workloads spread across more distributed devices, central cloud processing is not always fast enough. Edge computing solves a growing infrastructure need: bringing computation and storage closer to the data source. That means faster processing, reduced lag, and improved performance for applications that rely on speed and precision.

This is particularly important for Internet of Things (IoT) deployments, which often generate immense volumes of data from sensors, machines, or distributed terminals. Edge computing filters and processes that data onsite, only sending relevant outputs to the cloud. That lowers bandwidth consumption, speeds up reaction time, and conserves compute power where it matters.
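The filter-then-forward pattern can be sketched in a few lines. The edge node reduces a batch of raw readings to a compact summary and forwards only out-of-range values individually. The names, thresholds, and summary fields here are hypothetical assumptions for illustration, not any particular edge platform's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EdgeSummary:
    count: int
    mean: float
    alerts: list[float] = field(default_factory=list)  # out-of-band readings, forwarded raw


def summarize_at_edge(readings: list[float], low: float, high: float) -> EdgeSummary:
    """Reduce a batch of raw sensor readings to one summary for upload to the cloud."""
    if not readings:
        return EdgeSummary(count=0, mean=0.0)
    alerts = [r for r in readings if r < low or r > high]
    return EdgeSummary(count=len(readings), mean=sum(readings) / len(readings), alerts=alerts)
```

Instead of streaming every reading, the device uploads one small record per batch. Only the readings that need attention are sent in full, which is where the bandwidth and reaction-time savings come from.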

Security is also a factor. Many IoT devices lack hardened security protocols. Isolating them through edge systems helps mitigate network exposure and contain threats. That keeps critical workloads insulated from more vulnerable, low-end hardware commonly found in consumer or field-deployed IoT products.

Investments in edge technology are already growing rapidly, and with good reason. Executives who structure their networks for edge-first capabilities are making real-time decisions faster, keeping tighter control over sensitive data, and operating with less latency drag.

Cloud services are poised for substantial growth driven by AI and hybrid models

Cloud infrastructure is entering a new growth phase. That growth isn’t just from adoption; it’s from expansion fueled by AI workloads, new service models, and increasingly diverse hybrid and multicloud deployments. Cloud is no longer just about replacing legacy infrastructure; it’s about enabling faster product delivery and smarter operations.

The most significant drivers heading into 2025 are Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), Software-as-a-Service (SaaS), and Desktop-as-a-Service (DaaS). Each of these segments is seeing double-digit growth, which signals that enterprises are scaling both foundational and application-level workloads across the board. AI applications, in particular, require intensive compute capacity, and cloud platforms are often the only cost-effective place to run them consistently.

Hybrid cloud setups are also pushing more organizations toward flexible deployment strategies, blending the control of private environments with the adaptability of public cloud. This adds resilience and performance optimization but also increases demand for tools that manage architecture consistently across environments.

Executives need to prepare for higher cloud budgets, not because costs are ballooning unnecessarily, but because the role of cloud is expanding. Your infrastructure is handling more processes, more data, and increasingly intelligent systems. That means your budget structure should shift with your operational model.

Demand for FinOps, AIOps, and DevSecOps talent is rising sharply

Hiring for generalized tech talent is no longer enough. As cloud maturity deepens, organizations need professionals who specialize in managing financial optimization, infrastructure automation, and embedded security. These are not niche functions; they are core operational roles that drive product capability, cost stability, and compliance.

FinOps professionals help rationalize cloud budgets, provide visibility into where spend is going, and align cloud usage with revenue or unit economics. Without them, infrastructure costs can outpace growth and production velocity. AIOps engineers automate IT operations using AI, not just to monitor uptime, but to predict infrastructure anomalies before they break services. This directly supports higher availability and leaner operational headcount.

DevSecOps brings security into every part of the build pipeline, reducing vulnerabilities earlier in development and eliminating delays tied to security reviews at the end of feature delivery. These roles ensure agility doesn’t come at the cost of trust or governance.

If your teams lack these skills, you’re behind. These aren’t theoretical functions; they’re job categories with rising demand and not enough supply. Leadership should treat these roles as strategic hires, not support functions.

Strategic talent management is crucial for future-proofing technology

Technology alone doesn’t future-proof an organization. Software, infrastructure, and platforms are tools. What matters more is how your people use them, evolve them, and build with them under changing conditions. That depends entirely on the quality of your talent and how well positioned they are to lead innovation.

Organizations that attract and retain top-tier engineers, architects, and product leaders outperform. But it’s not just about hiring; it’s about how you develop and deploy that talent. Teams need the freedom to experiment, the clarity to align with business objectives, and the support to continuously upgrade their skills. This becomes even more critical as newer technologies like AI, edge computing, and distributed systems enter the mainstream. If your internal capabilities can’t keep up with these shifts, best-in-class tools won’t matter.

Culture is the lever. If your teams aren’t encouraged to collaborate across functions or move fast when conditions change, they’ll stagnate. Stagnation in engineering leads to stagnation in product, and that’s where growth flatlines. What you need is a system built around learning velocity, strategic execution, and measurable results.

Executives need to think about this deliberately. People are now the most powerful infrastructure you can invest in, because they are the ones making every other system work, scale, and evolve.

Recap

Every infrastructure choice is a long-term bet. You’re not just building for what works now; you’re shaping how fast your teams can move, how well your systems can adapt, and how much friction shows up as your business scales. Missteps don’t show up right away. But when they hit, they cost more than money: they stall execution, drain talent, and burn time you can’t get back.

Getting this right doesn’t mean chasing trends. It means building with intention. Choose tech that scales before you need it to. Prioritize security before it becomes an incident. Plan migrations before they’re forced. And invest in talent before the market beats you to it.

The companies that win aren’t just the ones with the best tools. They’re the ones whose infrastructure lets them move fast, stay reliable, and keep pace with a world that doesn’t slow down. If your systems and people are set up to evolve together, you’re already ahead.