Efficient, scalable cloud computing

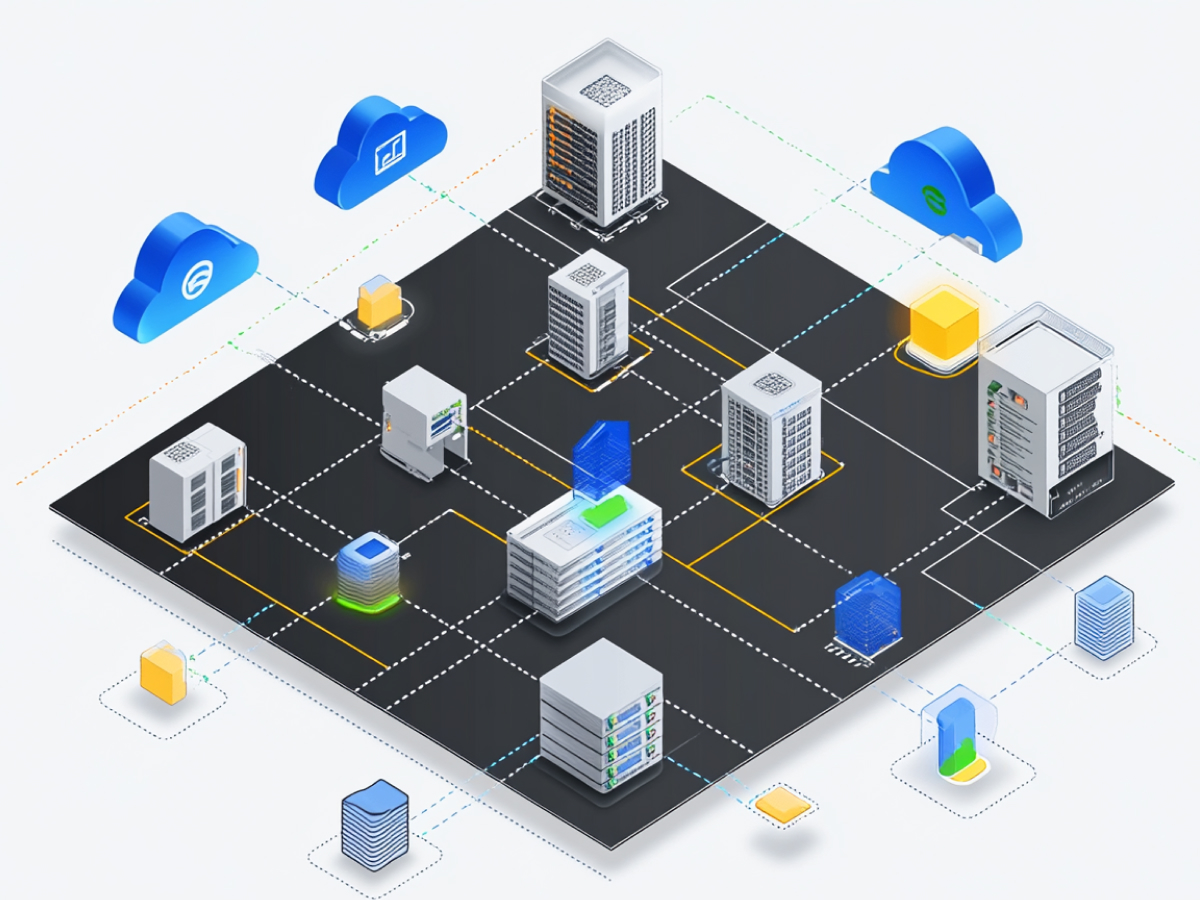

You’ve probably heard the term “decentralized mesh hyperscaler” tossed around. It sounds complex, but the idea is simple: put the workload where the data is, cut the lag, and get results faster. Instead of sending all the data to a central cloud server and waiting for it to be processed, you let a distributed group of nodes handle the work. These nodes communicate with one another directly, with no hierarchy and no waiting for top-down orders.

This architecture creates a network that’s self-healing and resilient. High availability and fault tolerance are part of the design: if one part of the network fails, the others adjust. This decentralized setup is not some science experiment; it’s already being applied in edge computing, wireless communication, and IoT. Wherever speed and system uptime matter, this model makes real sense. It reduces latency, scales fast, and performs under load.
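To make "the others adjust" concrete, here is a minimal Python sketch of rerouting around a failed node. The four-node topology, the node names, and the `find_route` helper are all hypothetical and chosen for illustration; real mesh systems rely on far more elaborate routing and health-checking than a breadth-first search.

```python
from collections import deque


def find_route(links, source, target, failed=frozenset()):
    """Breadth-first search for a shortest path that avoids failed nodes."""
    if source in failed or target in failed:
        return None
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for peer in links.get(node, ()):
            if peer not in visited and peer not in failed:
                visited.add(peer)
                queue.append(path + [peer])
    return None  # no surviving path


# Hypothetical mesh: each node lists the peers it talks to directly.
MESH = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d"],
    "d": ["b", "c"],
}

healthy_route = find_route(MESH, "a", "d")            # goes through "b"
rerouted = find_route(MESH, "a", "d", failed={"b"})   # falls back to "c"
```

Because every node has more than one peer, losing "b" does not cut "a" off from "d". Losing both "b" and "c" would, which is why redundancy in the topology matters as much as the routing logic.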

If your applications are hitting the ceiling on speed and scalability in traditional cloud models, decentralized mesh approaches are built for your next challenge. Just make sure your organization has the right skills to handle the transition, because while the system is powerful, operating it requires a new way of thinking.

AI-driven workloads benefit from decentralized mesh architectures

AI doesn’t wait. Machine learning systems need to process data fast, and they need dependable, fail-safe operation. Traditional cloud setups, built around centralized data centers, struggle with this. They’re often too slow, especially when handling live data from thousands or millions of sources. That’s where decentralized mesh architectures pull ahead. By processing data closer to where it’s generated, these systems give AI the infrastructure it needs to be truly real time.

Take a self-driving car platform. Vehicles generate massive amounts of data every second: commands, sensor readings, environmental feedback. You don’t have time to route that data all the way to a remote data center across the world. You need on-the-spot analysis. A decentralized mesh lets local nodes handle that computation, dramatically reducing latency and helping vehicles, and the systems that manage them, respond in near real time.

Now scale that to other AI use cases: automated manufacturing lines, biometric access control, real-time translation. In all these areas, reduced lag equals enhanced performance. And if your systems rely on detecting and reacting faster than your competitors, decentralized computing isn’t optional; it’s mission-critical.

At the executive level, here’s what matters: decentralized mesh allows scalability without sacrificing speed. It answers the AI challenge not by making existing systems work harder, but by redesigning where and how workloads run. If you’re serious about staying ahead in AI, your infrastructure has to evolve to be fast, decentralized, and built for aggressive performance.

Efficient resource utilization and energy-conscious operations

When you decentralize, you reduce waste. Traditional cloud setups often run with over-allocated capacity. Resources are reserved “just in case,” which results in idle hardware consuming energy for no productive purpose. Mesh computing changes the game here. By distributing workloads across nodes and using what’s needed when it’s needed, utilization rates improve. You get more output per unit of energy and lower overall system waste.
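A small worked example shows where the utilization gain comes from. The sketch below is illustrative arithmetic only: the three workloads, their demand figures, and the `utilization` helper are hypothetical, not measurements. It compares reserving each workload’s own peak against sizing one shared pool to the combined peak.

```python
def utilization(demand_series, capacity):
    """Average fraction of provisioned capacity actually in use."""
    return sum(demand_series) / (capacity * len(demand_series))


# Hypothetical demand, in compute units, over four time slots.
DEMAND = {
    "video": [10, 40, 10, 10],
    "sensors": [40, 10, 10, 10],
    "reports": [10, 10, 40, 10],
}

# Total demand per time slot across all workloads.
combined = [sum(slot) for slot in zip(*DEMAND.values())]

# "Just in case" model: every workload reserves its own peak.
siloed_capacity = sum(max(series) for series in DEMAND.values())

# Pooled model: capacity is sized to the peak of the combined demand.
pooled_capacity = max(combined)

siloed_util = utilization(combined, siloed_capacity)
pooled_util = utilization(combined, pooled_capacity)

print(f"siloed: {siloed_capacity} units, {siloed_util:.1%} utilized")
print(f"pooled: {pooled_capacity} units, {pooled_util:.1%} utilized")
```

The gain in this sketch depends entirely on the assumption that the workloads peak at different times. If every workload peaked in the same slot, the pooled and siloed capacities would be identical and pooling would save nothing.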

This has a direct impact on both operating expenses and environmental considerations. For companies under regulatory or shareholder pressure to adopt more sustainable practices, mesh computing offers a meaningful step toward cleaner operations, without sacrificing performance. More efficient infrastructure planning means you don’t have to overbuild to ensure availability. You simply tap into distributed capacity when demand spikes and release it when it’s no longer needed.

To be clear, this isn’t about making small gains. We’re talking about tangible reductions in energy use, server overhead, and long-term capital costs. Your infrastructure footprint gets smarter, not just bigger. From an executive perspective, that translates into greater ROI on every compute cycle, and a stronger position when it comes to meeting sustainability targets without compromising scale or speed.

Increased operational complexity can be a challenge

Now for the trade-off: it’s not simple. Managing workloads across a distributed mesh of nodes introduces real complexity. When you remove the central point of command, you need coordination strategies that can handle distributed synchronization, maintain data consistency, and adapt in real time. Not all systems or teams are set up for this.

Each node in the network has to do its part. If communication between nodes breaks down or certain nodes become overloaded, latency climbs and performance degrades. In effect, the benefits you’re counting on (speed, reliability, responsiveness) can quickly disappear if the architecture isn’t managed well. Every node becomes a critical part of the equation, and that adds operational overhead.
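One way to picture the coordination problem is a dispatcher that has to keep track of which nodes are reachable and how busy each one is. The following Python sketch is a simplified assumption of how that might look; the `Node` class, the `dispatch` function, and the node names are hypothetical, and a real mesh would make this decision without a single shared view of every node.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    capacity: int           # maximum concurrent tasks
    active: int = 0         # tasks currently running
    reachable: bool = True  # False when communication has broken down

    @property
    def load(self):
        return self.active / self.capacity


def dispatch(nodes):
    """Assign one task to the least-loaded reachable node, or return None."""
    candidates = [n for n in nodes if n.reachable and n.active < n.capacity]
    if not candidates:
        return None  # every node is full or unreachable: the task must wait
    chosen = min(candidates, key=lambda n: n.load)
    chosen.active += 1
    return chosen.name


nodes = [
    Node("edge-1", capacity=2),
    Node("edge-2", capacity=4),
    Node("edge-3", capacity=2, reachable=False),
]

# Six tasks fill the two reachable nodes; the seventh has nowhere to go.
assignments = [dispatch(nodes) for _ in range(7)]
```

Even in this toy version, one unreachable node removes a quarter of the total capacity, and the seventh task is left waiting. That is the kind of degradation that monitoring and orchestration have to catch early.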

From a leadership standpoint, this means rethinking internal capabilities. Most organizations have teams that are used to centralized cloud systems. They know how to spin up a server in a region and manage it from a dashboard. With mesh, the operational model is different. You need people who understand decentralized coordination, automated failover, and distributed consistency protocols.

There’s also compliance to handle. When data spans multiple nodes across regions, privacy and regulatory obligations increase. Duplication of data, increased storage usage, and differences in legal jurisdictions all have to be accounted for. This kind of complexity requires top-down commitment to build the right systems, and hire or train the right people. Without that investment, the mesh model won’t deliver its full potential.

A centralized cloud setup may be more practical

For many organizations, decentralized mesh systems aren’t necessary, at least not yet. If your workloads are stable, predictable, and not time-sensitive, then a centralized cloud setup is more efficient. Operating in a single region simplifies everything. You get consistent performance, fewer deployment headaches, and easier data governance.

When all your compute resources and data are in one location, you reduce the need for cross-node synchronization or complex coordination layers. That’s a big advantage for workloads like batch data analysis, archive storage, or scheduled AI training jobs. These workloads don’t need millisecond-level response times. They need reliability and predictable cost structures, both of which centralized systems provide extremely well.

It also makes compliance and regulatory control easier. When your data stays in one region, you don’t have to navigate conflicting international privacy laws or re-architect for local storage requirements. That’s a key consideration for industries dealing with sensitive data, such as finance, healthcare, and legal services. Not every operation benefits from distribution. If your infrastructure needs are steady and your priority is operational simplicity, centralized cloud will typically outperform more distributed solutions in both speed of deployment and total cost of ownership.

Organizations must evaluate trade-offs

Decentralized mesh architecture looks good on paper, but implementation isn’t automatic. It takes skilled teams, robust systems, and clear strategic intent. Organizations that approach it as a simple out-of-the-box upgrade will run into friction quickly. This architecture offers real performance advantages, but only if there’s operational readiness.

From a business leadership perspective, this is a question of alignment. Do your operational needs truly require this level of distribution? Do you have the internal expertise to maintain it? Have you accounted for the long-term support and training needed for scale? These are critical questions. Without the right foundation, mesh systems can introduce more cost than value.

Decentralized mesh environments also tend to be less forgiving. Errors or misconfigurations in one node can cascade quickly. Monitoring, orchestration, and fault resolution capabilities need to operate continuously and accurately. You’re not just deploying an advanced cloud infrastructure; you’re fundamentally redesigning how your organization handles compute, storage, and real-time data movement.

There’s a long-term play here. For companies operating at the edge of AI, IoT, or real-time services, the performance gains outweigh the added complexity. But those gains require sustained investment and a high level of institutional tech maturity. This isn’t about chasing innovation for its own sake. It’s about knowing when the complexity is justifiable, and when it isn’t.

Key takeaways for leaders

- Embrace proximity-based computing: Decentralized mesh architectures enable near-source data processing, reducing latency and improving scalability. Leaders should explore this model for operations requiring high availability and rapid responsiveness.

- Optimize AI infrastructure with mesh: AI workloads benefit from reduced lag and increased resilience when processed across distributed nodes. Executives should consider mesh computing for real-time applications where speed and fault tolerance are critical.

- Drive efficiency and reduce waste: Mesh computing improves resource utilization by tapping into underused assets on demand. Organizations aiming to reduce operational costs and meet sustainability targets can benefit from this flexible infrastructure model.

- Prepare for elevated complexity: Distributed mesh systems demand advanced coordination, real-time synchronization, and sophisticated oversight. Leaders must assess their internal capabilities before scaling this architecture to avoid degraded performance and compliance risks.

- Choose practicality over novelty when appropriate: Centralized cloud remains more suitable for stable, batch-driven, or non-urgent workloads. Decision-makers should use it to streamline compliance, reduce risk, and maintain operational simplicity where real-time performance isn’t essential.

- Balance innovation with organizational capability: While mesh systems offer performance advantages, they require high technical maturity and sustained investment. Leaders should align infrastructure choices with specific business goals, operational readiness, and ROI expectations.